CÉCI HPC Training

The CÉCI offers, each year, a full-fledged programme of training sessions for researchers who use, or wish to use, the CÉCI clusters. The training sessions are hosted at Louvain-la-Neuve and are delivered by no fewer than five different HPC experts. The offer ranges from baby steps into the Linux world to extreme MPI programming and GPU computing. The sessions are cluster-oriented, so knowledge of a programming language or of a scientific computing software package is assumed. Training sessions are delivered in English or French.

See detailed information here.

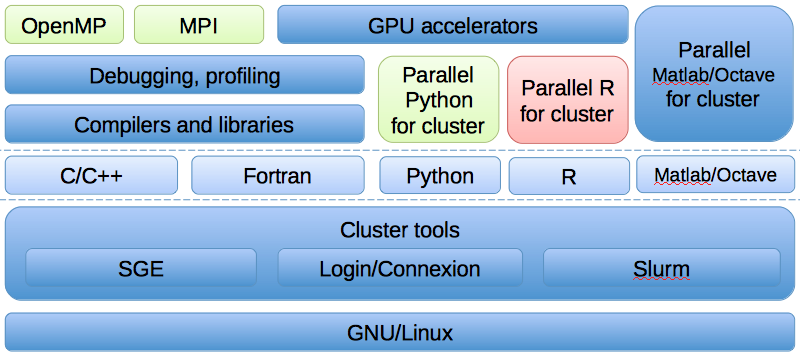

The items between the dashed lines are not offered by the CÉCI training sessions. They are considered prerequisites for the items above them. Items in blue are organised by the CISM, items in light green are organised by the CÉCI, and the item in red is organised by the SMCS.

Basic tools

Linux

Linux is the most common operating system in the HPC world. A basic understanding of Linux and GNU commands is necessary to take advantage of the clusters. The Linux session is organized by the CISM and aimed at people new to Linux.

Login/Connexion

The CÉCI clusters use a specific, global, account creation and access process. This session explains the whole process from acquiring credentials to successfully connecting to the clusters. The session is hosted by Bertrand Chenal (FNRS).

Cluster tools

SLURM

Slurm is the job manager installed on all CÉCI clusters. The session teaches attendees how to prepare a submission script, how to submit, monitor, and manage Slurm jobs. It is delivered by the CISM.
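
As a rough illustration of what preparing a submission script involves, the sketch below builds the text of a minimal Slurm script from Python. The function name `make_slurm_script`, the program `./my_program` and the resource values are hypothetical placeholders, not CÉCI recommendations; the `#SBATCH` options shown (`--job-name`, `--ntasks`, `--time`, `--mem-per-cpu`) are standard Slurm options.

```python
def make_slurm_script(job_name, command, ntasks=1,
                      time_limit="01:00:00", mem_per_cpu_mb=1024):
    """Return the text of a minimal Slurm submission script.

    All default values are illustrative assumptions; adapt them to the
    cluster and to the job at hand.
    """
    lines = [
        "#!/bin/bash",
        f"#SBATCH --job-name={job_name}",
        f"#SBATCH --ntasks={ntasks}",
        f"#SBATCH --time={time_limit}",            # wall-clock limit, HH:MM:SS
        f"#SBATCH --mem-per-cpu={mem_per_cpu_mb}",  # megabytes by default
        "",
        f"srun {command}",                          # launch the program as a job step
    ]
    return "\n".join(lines) + "\n"


# Hypothetical usage: a 4-task job running ./my_program
script = make_slurm_script("demo", "./my_program", ntasks=4)
print(script)
```

Saved to a file such as `submit.sh`, a script like this would typically be submitted with `sbatch submit.sh`, monitored with `squeue`, and cancelled with `scancel` followed by the job ID.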

SGE

SGE is a job manager installed on many clusters of the CÉCI universities. The session teaches attendees how to prepare a submission script, how to submit, monitor, and manage SGE jobs. It is delivered by the CISM.

Power tools

Compilers and optimized libraries

The choice of the compiler (many clusters have several, among others gcc and intel) is important, as is the choice of the compiling options. This session reviews the strengths and weaknesses of the compilers and their optimal use. It is hosted by CISM.

Debugging and profiling

Debugging often takes more than 80% of a programmer's time, so doing it right can save a lot of time. Once the code is debugged, profiling it is the next step that helps reduce execution time. This session is hosted by CISM.
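
To give an idea of what profiling looks like in practice, here is a minimal sketch using Python's standard `cProfile` and `pstats` modules. The session itself may well rely on other tools and languages; the functions `slow_sum_of_squares` and `profile_call` are hypothetical names introduced only for this illustration.

```python
import cProfile
import io
import pstats


def slow_sum_of_squares(n):
    """Deliberately naive loop, used here as the code to be profiled."""
    total = 0
    for i in range(n):
        total += i * i
    return total


def profile_call(func, *args):
    """Run func(*args) under cProfile; return its result and a text report."""
    profiler = cProfile.Profile()
    profiler.enable()
    result = func(*args)
    profiler.disable()

    # Collect the statistics into a string instead of printing them directly.
    buffer = io.StringIO()
    stats = pstats.Stats(profiler, stream=buffer)
    stats.sort_stats("cumulative").print_stats(5)  # show at most 5 entries
    return result, buffer.getvalue()


result, report = profile_call(slow_sum_of_squares, 100_000)
print(report)
```

The report lists, for each function, the number of calls and the time spent in it, which indicates where optimisation effort is likely to pay off.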

Cluster use of common software

Python

The use of Python for scientific computing is rising thanks to modules such as numpy, scipy and matplotlib. This session explores the efficient uses of Python in that context for situations where numpy and co. are of less use. It assumes a working knowledge of Python and is delivered by Bertrand Chenal (CÉCI).

R

R is widely used in the statistical community and is becoming more and more 'parallel computing-aware' as libraries such as doMC parallelise computations transparently for the user. This session is delivered by the SMCS and assumes a little knowledge of R.

Matlab/Octave

Matlab and its free, mostly compatible alternative, Octave, are becoming more and more suitable for cluster computing with the rise of parallel computing toolboxes and/or syntactical constructs. The session explores the many ways Matlab code can be parallelised and submitted to a cluster (taking into account the tough problem of licensing). It is organized by the CISM.

Parallel programming

OpenMP

OpenMP is an easy alternative to pthreads for multithreaded computing. OpenMP extensions now exist in most C and Fortran compilers and allow flagging loops and other constructs for efficient multithreading with little supplementary programming effort. The session is delivered by David Colignon (CÉCI).

MPI

MPI is a library for message passing between processes running on distinct computers. It offers high-level primitives for efficient communication. The session is delivered by David Colignon (CÉCI).

GPU computing

GPU computing is a rising trend in HPC; it consists in sharing the computations between the CPU and the GPU, the graphics processing unit. Very large speedups can be obtained, but at the cost of a lot of development effort. Nowadays, however, with the advent of GPU-aware libraries such as cuBLAS, reasonable speedups can be achieved at a very marginal cost. The session is delivered by the CISM.

Other related lectures

- ULG: INFO 0939: High Performance Scientific Computing.
- UCL: MECA 2300: Compléments de méthodes numériques.